Basics

- Supervised Learning (Classification, Regression): is powerful when the labels in the training data are known to be correct, for instance when dealing with diseases.

- Unsupervised Learning (Clustering): can be useful for finding hidden structure in unlabeled data.

- Reinforcement Learning: can provide powerful tools that allow agents to adapt and improve quickly, even in complex scenarios such as strategy games. The agent interacts with its environment in discrete time steps.
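To make the "discrete time steps" idea concrete, here is a minimal sketch of an agent learning by trial and error. It uses tabular Q-learning, which is one common reinforcement learning technique chosen here purely for illustration. The five-cell corridor, the reward of 1.0 at the goal, and the names `step`, `train` and `q_table` are all assumptions made up for this example.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Toy environment: move one cell, staying inside the corridor."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    # q[state][action_index] is the estimated long-term reward.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # One discrete time step: act, observe, update.
            a = rng.randrange(len(ACTIONS))  # explore uniformly at random
            next_state, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy read off the learned table for the non-goal cells.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)
```

After training, the greedy policy should choose "step right" (`+1`) in every non-goal cell, because that is the shortest route to the reward. The agent here explores completely at random; Q-learning can still recover the best policy from that experience, which keeps the sketch short.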

AI in Steps

The most common steps towards creating artificial intelligence are:

- Know the Domain, what you are solving for

- Study the Data (Data Mining)

- Cleanse and Normalize the Data, Develop Tools

- Choose a Model

- Test a Few Models, Shortlist the Best Candidates, Pick the Best Model

- Train / Fine-Tune / A/B Test the Model

- Correct the Model If It Is Overfitting or Underfitting

- Quantify the Model: Monitor Errors, Learnings, Positive Impact
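The middle steps (test a few models, pick the best, watch for overfitting or underfitting) can be sketched roughly as follows. This is only an illustration under stated assumptions: the data is made up, the three candidate models (`fit_mean`, `fit_linear`, `fit_nearest`) are hypothetical stand-ins for real candidates, and a simple hold-out split is used where a real project might prefer cross-validation.

```python
def mse(model, xs, ys):
    """Mean squared error of a prediction function on a data set."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_mean(xs, ys):
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y                      # ignores x: tends to underfit


def fit_linear(xs, ys):
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept       # ordinary least squares


def fit_nearest(xs, ys):
    pairs = list(zip(xs, ys))
    # Memorizes the training set: tends to overfit.
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


# Made-up data: y is roughly 2x + 1 with a small fixed wobble.
wobble = [0.3, -0.2, -0.4, 0.1, 0.2]
xs = [float(i) for i in range(20)]
ys = [2 * x + 1 + wobble[i % 5] for i, x in enumerate(xs)]

# Hold out every second point for validation.
train_x, train_y = xs[0::2], ys[0::2]
valid_x, valid_y = xs[1::2], ys[1::2]

candidates = {"mean": fit_mean, "linear": fit_linear, "nearest": fit_nearest}
report = {}
for name, fit in candidates.items():
    model = fit(train_x, train_y)
    report[name] = (mse(model, train_x, train_y), mse(model, valid_x, valid_y))
    print(f"{name:8s} train={report[name][0]:.3f} valid={report[name][1]:.3f}")

# Pick the candidate with the lowest validation error.
best = min(report, key=lambda name: report[name][1])
print("best on validation:", best)
```

The gap between training and validation error is the signal to watch. The nearest-neighbour model has zero training error but a much larger validation error (overfitting), while the mean predictor is poor on both sets (underfitting).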

Model Selection

Based on an understanding of the domain you are solving for and knowledge of the data, one is well equipped to select the models that are likely to work best. Some examples:

- Supervised Learning

  - k-Nearest Neighbors

  - Linear Regression/Polynomial Regression

  - Support Vector Machines (SVM)

  - Decision Trees, Random Forests

- Unsupervised Learning

  - Clustering

    - k-Means

    - Hierarchical Cluster Analysis (HCA)

    - Expectation Maximization

- Semi-supervised Learning

- Reinforcement Learning

- Deep Learning

  - Recurrent Neural Networks
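As one concrete instance from the list above, here is a minimal sketch of k-Means using the standard alternating assign-and-update loop. The sample points and the choice of starting centroids are assumptions made for illustration; real libraries use more careful initialization.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, centroids, max_iterations=100):
    """Alternate between assigning points and updating centroids."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(len(centroids)),
                key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:  # keep the old centroid if a cluster is empty
                centroids[k] = tuple(sum(axis) / len(members)
                                     for axis in zip(*members))
    return labels, centroids


# Made-up, unlabeled points forming two visible groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
labels, centroids = k_means(points, centroids=[points[0], points[3]])
print(labels)
print(centroids)
```

No labels are supplied anywhere: the algorithm groups the first three points together and the last three together purely from their positions.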

Library Selection

There are readily available algorithms for different modes of machine learning in different languages and platforms. Some examples:

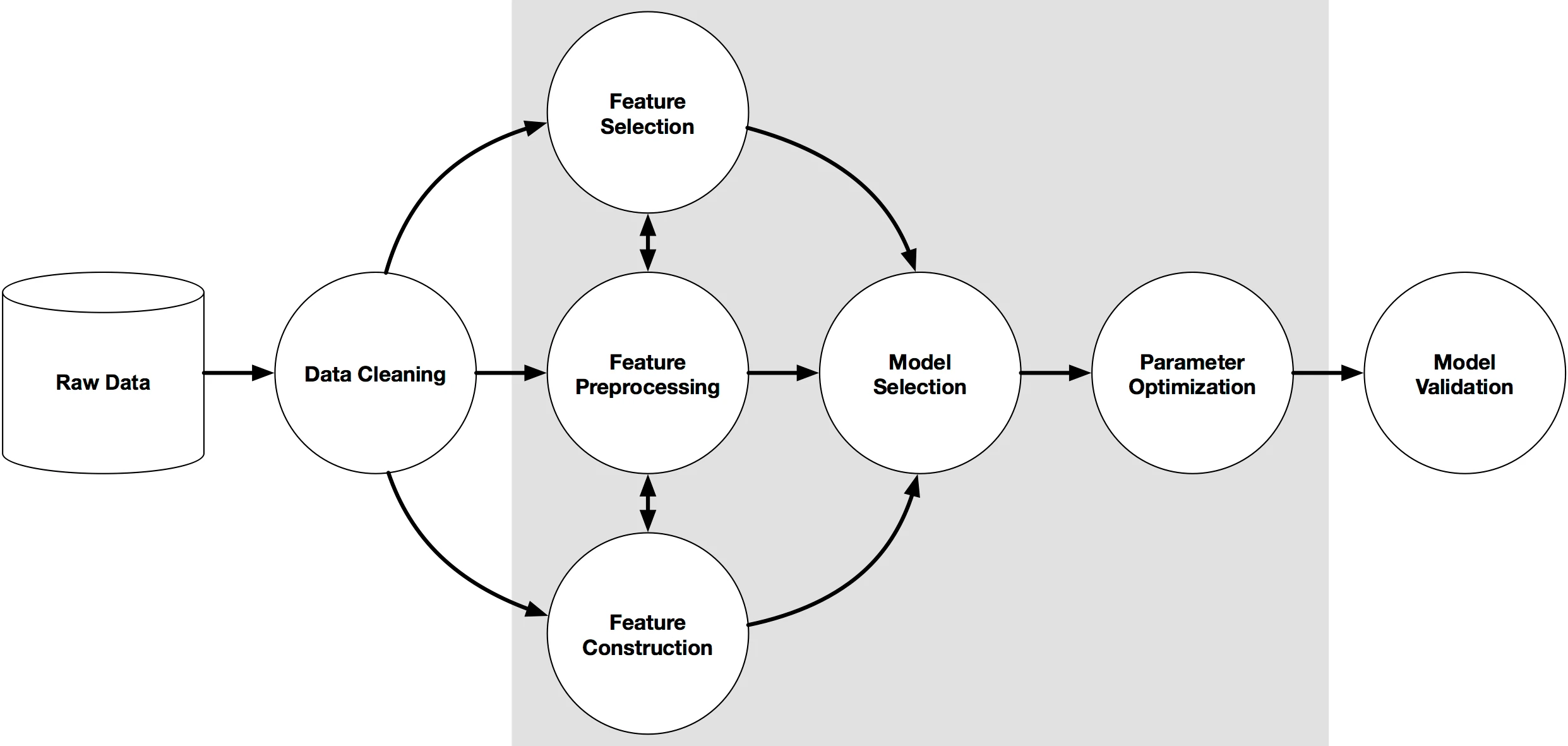

Feature Selection

Feature selection is the process of selecting a subset of relevant features for use in model construction. Feature selection is useful in itself, but it mostly acts as a filter, muting features that add nothing useful beyond the features you already have. Feature selection techniques are used for four reasons:

- simplification of models to make them easier to interpret,

- shorter training times,

- avoidance of the curse of dimensionality,

- enhanced generalization by reducing overfitting.

Feature selection is different from dimensionality reduction. Both methods seek to reduce the number of attributes in the dataset, but a dimensionality reduction method does so by creating new combinations of attributes, whereas feature selection methods include and exclude attributes present in the data without changing them. Examples of dimensionality reduction methods include Principal Component Analysis, Singular Value Decomposition and Sammon’s Mapping.
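The difference can be seen on a tiny example. The sketch below uses two made-up attributes (`width` and `height` are hypothetical names). For selection it keeps the column with the larger variance, which is just one simple criterion picked for illustration. For reduction it projects both columns onto the first principal axis, using the closed-form angle that exists for the two-attribute case.

```python
import math


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def covariance(xs, ys):
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / len(xs)


# Two made-up attributes measured on five samples.
data = {
    "width": [1.0, 2.0, 3.0, 4.0, 5.0],
    "height": [2.1, 3.9, 6.2, 7.8, 10.1],
}

# Feature selection: keep one existing column, values unchanged.
selected_name = max(data, key=lambda name: variance(data[name]))
selected = data[selected_name]

# Dimensionality reduction: build one NEW column that mixes both attributes.
# For two attributes the first principal axis has a closed-form angle.
xs, ys = data["width"], data["height"]
angle = 0.5 * math.atan2(2 * covariance(xs, ys), variance(xs) - variance(ys))
mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
projected = [(x - mean_x) * math.cos(angle) + (y - mean_y) * math.sin(angle)
             for x, y in zip(xs, ys)]

print("selected column:", selected_name, selected)
print("new combined column:", [round(p, 3) for p in projected])
```

Both approaches go from two attributes to one, but the selected column is still an original measurement, while the projected column is a weighted mix of both and no longer corresponds to any single attribute in the data.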

Feature selection methods can be used to identify and remove unneeded, irrelevant and redundant attributes that do not contribute to the accuracy of a predictive model, or that may in fact decrease it. Fewer attributes are desirable because they reduce the complexity of the model, and a simpler model is easier to understand and explain.
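One simple way to remove irrelevant and redundant attributes is a correlation filter, sketched below. This is an assumption-laden illustration and only one of many possible methods: the feature names, the data, and the thresholds `min_relevance` and `max_overlap` are all made up, and Pearson correlation only captures linear relationships.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either column is constant."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    norm = math.sqrt(sum(d * d for d in dx) * sum(d * d for d in dy))
    return sum(a * b for a, b in zip(dx, dy)) / norm if norm else 0.0


def filter_features(features, target, min_relevance=0.5, max_overlap=0.95):
    """Keep relevant features, skipping near-duplicates of kept ones."""
    relevance = {name: abs(pearson(col, target))
                 for name, col in features.items()}
    kept = []
    for name in sorted(features, key=relevance.get, reverse=True):
        if relevance[name] < min_relevance:
            continue  # irrelevant: barely related to the target
        if any(abs(pearson(features[name], features[other])) >= max_overlap
               for other in kept):
            continue  # redundant: duplicates a feature already kept
        kept.append(name)
    return kept


# Made-up data: "size_m" drives the target, "size_cm" repeats it in
# different units, and "flip" is unrelated to the target.
features = {
    "size_m": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "size_cm": [100.0, 200.0, 300.0, 400.0, 500.0, 600.0],
    "flip": [1.0, -1.0, 1.0, -1.0, 1.0, -1.0],
}
target = [3.1, 5.9, 9.2, 11.8, 15.1, 18.0]

kept = filter_features(features, target)
print(kept)
```

Only one of the two size columns survives, since the other carries the same information, and `flip` is dropped because it is only weakly correlated with the target. The attributes that remain are untouched originals, which keeps the resulting model easy to explain.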

The objective of feature selection is three-fold:

- improving the prediction performance of the predictors,

- providing faster and more cost-effective predictors,

- and providing a better understanding of the underlying process that generated the data.