Platform

- Docker Engine and Docker CLI

- Docker Compose (formerly “Fig”)

- Docker Machine

- Docker Swarm Mode

- Kitematic

- Docker Cloud (formerly “Tutum”)

- Docker Datacenter

Log into a Docker container as the root user

You can log into a container as the root user (ID = 0) instead of the image's default user by passing the -u option.
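
As a minimal sketch of the -u option, the helper below opens a root shell in a running container with docker exec. The function name root_shell and the container name in the usage comment are hypothetical, and /bin/sh is assumed to exist in the image.

```shell
# Hypothetical helper: open a shell as root (UID 0) in a running container.
# -u 0 overrides the image's default user; -it attaches an interactive terminal.
root_shell() {
  docker exec -u 0 -it "$1" /bin/sh
}

# Usage (container name is an example value):
#   root_shell my-container
```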

Getting logs
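
One possible way to read a container's logs is docker logs. The sketch below follows the log stream starting from the last lines; the function name follow_logs and the default of 100 lines are assumptions made for illustration.

```shell
# Hypothetical helper: follow a container's logs, starting from the last N lines.
# $1 = container name or ID, $2 = number of lines to start from (default 100).
follow_logs() {
  docker logs --follow --tail "${2:-100}" "$1"
}

# Usage (container name is an example value):
#   follow_logs my-container 50
```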

Creating and connecting to a network
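
A minimal sketch of these two steps, assuming a user-defined bridge network. The function names and the names in the usage comments are hypothetical.

```shell
# Create a user-defined network (bridge is the default driver).
create_network() {
  docker network create "$1"
}

# Attach an already running container to that network.
# $1 = network name, $2 = container name or ID.
connect_container() {
  docker network connect "$1" "$2"
}

# Usage (names are example values):
#   create_network my-net
#   connect_container my-net my-container
```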

Running a container on a network
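
A new container can be started directly on an existing network with the --network option of docker run. The sketch below assumes the network already exists; the function name and the example values are hypothetical.

```shell
# Start a detached container attached to an existing network.
# $1 = network name, $2 = container name, $3 = image.
run_on_network() {
  docker run -d --name "$2" --network "$1" "$3"
}

# Usage (names and image are example values):
#   run_on_network my-net web nginx:alpine
```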

Docker Compose

Create a docker-compose.yml file, then start the services it defines.
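
As a rough illustration, the script below writes a minimal docker-compose.yml and defines a helper that starts it. The service name, image and port mapping are example values, not taken from the original notes, and the Compose V2 syntax (docker compose) is assumed.

```shell
# Write a minimal Compose file (service name, image and ports are example values).
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
EOF

# Start the services in the background.
# Compose V2 syntax; the standalone binary uses `docker-compose up -d` instead.
compose_up() {
  docker compose up -d
}
```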

Below is an example for Graylog:

Below is another example, for MySQL:

Disable a service

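To disable a service in docker-compose.yml, assign it to a profile; Compose then starts it only when that profile is enabled.

To enable the dev profile, pass --profile dev or set the COMPOSE_PROFILES environment variable.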
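
A small sketch of profiles in practice, assuming Compose V2. The service names and images are hypothetical; only the dev profile name comes from the text above.

```shell
# Write a Compose file in which one service is tied to the dev profile.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine
  debug-tools:
    image: busybox
    command: sleep 3600
    profiles: ["dev"]   # not started unless the dev profile is enabled
EOF

# Starts only `web`; `debug-tools` is effectively disabled.
up_default() {
  docker compose up -d
}

# Starts `web` and `debug-tools`.
up_dev() {
  docker compose --profile dev up -d
}

# Same effect as up_dev, using the environment variable.
up_dev_env() {
  COMPOSE_PROFILES=dev docker compose up -d
}
```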

Startup and shutdown order

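
Compose starts services in dependency order and stops them in the reverse order. The sketch below uses depends_on with a health check so that web starts only after db reports healthy. The services, images and password are example values, not taken from the original notes.

```shell
# Write a Compose file where `web` waits for a healthy `db`.
cat > docker-compose.yml <<'EOF'
services:
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 5s
      timeout: 3s
      retries: 10
  web:
    image: nginx:alpine
    depends_on:
      db:
        condition: service_healthy   # start web only after db is healthy
EOF
```

On shutdown the order is reversed: web is stopped before db.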

Docker Swarm Mode

- Container Orchestration with Docker Swarm Mode

- Deploy a stack to a swarm

- Use swarm mode routing mesh

- Administer and maintain a swarm of Docker Engines

Create machines

For a development environment, first create virtual machines using docker-machine. Append “&” to a command to send the process to the background.

You can also use Vagrant instead of docker-machine. You can create the machines on amazonec2, azure, digitalocean, google, etc. instead of virtualbox.

To create 3 machines with 2 CPUs and 4 GB of RAM each in VirtualBox, run:
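
A sketch of the machine creation step, assuming the virtualbox driver and the machine names vbox-01 to vbox-03 used later in these notes. The function name create_machines is hypothetical.

```shell
# Create three VirtualBox machines, each with 2 CPUs and 4096 MB of RAM.
# The trailing "&" runs each create in the background;
# `wait` blocks until all of them have finished.
create_machines() {
  for i in 1 2 3; do
    docker-machine create \
      --driver virtualbox \
      --virtualbox-cpu-count 2 \
      --virtualbox-memory 4096 \
      "vbox-0$i" &
  done
  wait
}

# Usage:
#   create_machines
```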

To list the machines, run docker-machine ls.

Init and join to the swarm

To connect to the first virtual server, which will be the manager, run:
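
The original command is not preserved here, so the sketch below shows two common ways to work against a machine created by docker-machine. Both function names are hypothetical.

```shell
# Option 1: open an SSH session on the machine and run docker commands there.
ssh_machine() {
  docker-machine ssh "$1"
}

# Option 2: point the local docker client at the machine's daemon
# by loading the environment variables that docker-machine prints.
use_machine() {
  eval "$(docker-machine env "$1")"
}

# Usage:
#   ssh_machine vbox-01
#   use_machine vbox-01
```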

After connecting to vbox-01, run docker swarm init to start the swarm and make the cluster ready.

This gives the error message below:

"Error response from daemon: could not choose an IP address to advertise since this system has multiple addresses on different interfaces (10.0.2.15 on eth0 and 192.168.99.100 on eth1) - specify one with --advertise-addr"

Since the VirtualBox machine has two network interfaces, specify the address that the other nodes can reach (192.168.99.100 on eth1 in this example) with the --advertise-addr parameter.
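
A minimal sketch of the corrected command, using the eth1 address from the error message above. The function name swarm_init is hypothetical.

```shell
# Initialize the swarm and advertise the given address to the other nodes.
swarm_init() {
  docker swarm init --advertise-addr "$1"
}

# Usage (address taken from the error message above):
#   swarm_init 192.168.99.100
```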

You should get the output below:

Swarm initialized: current node (chfpci27hkjr58m4wbfhe72wz) is now a manager.

To add a worker to this swarm, run the following command on the worker nodes:

To add a manager to this swarm, run docker swarm join-token manager and follow the instructions.

To add a worker to this swarm, run docker swarm join-token worker and follow the instructions.

To connect to the second virtual server, which will be a worker, run:

To add vbox-02 as a worker, run the docker swarm join command printed by docker swarm init:
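
A sketch of the join step. The token is whatever docker swarm join-token prints on the manager, and 2377 is the default swarm management port. The function names are hypothetical.

```shell
# On the manager: print only the worker join token.
worker_token() {
  docker swarm join-token -q worker
}

# On the worker: join the swarm.
# $1 = join token, $2 = manager address.
join_swarm() {
  docker swarm join --token "$1" "$2:2377"
}

# Usage (run on vbox-02 with the token obtained on vbox-01):
#   join_swarm "<token>" 192.168.99.100
```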

Repeat the same steps for vbox-03.

To list the nodes, run docker node ls on a manager node, which is vbox-01 in this example.

Our manager and worker nodes are now ready for deploying a stack.

Deploy a stack to a swarm


Since there are multiple nodes, use docker service ps to see which node the service runs on.

Then check that the service is running:

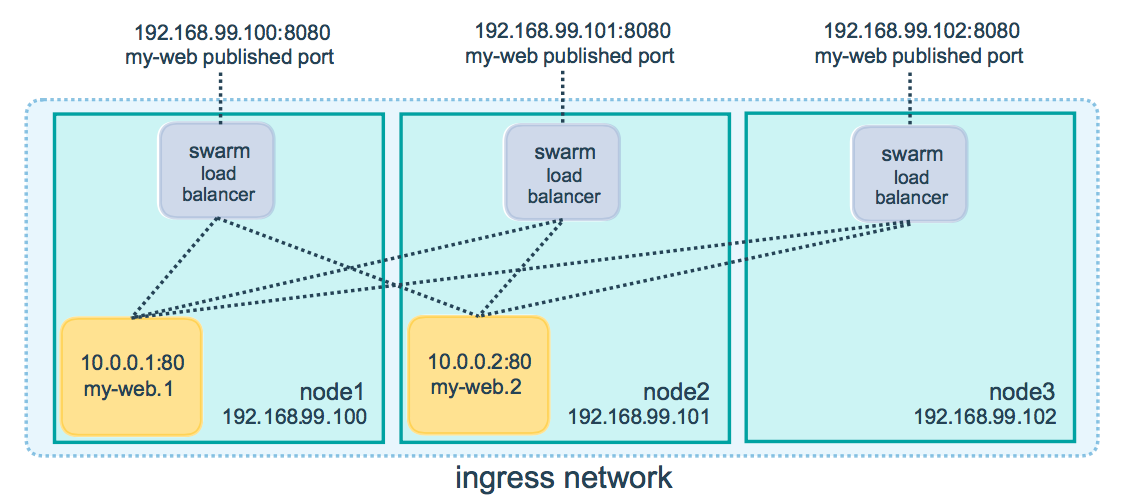

We created a single service task on one of the three nodes, yet the service is reachable through all three nodes. This is thanks to the routing mesh.

The routing mesh enables each node in the swarm to accept connections on published ports for any service running in the swarm, even if there’s no task running on the node. The routing mesh routes all incoming requests to published ports on available nodes to an active container.
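
The behaviour described above can be tried with a sketch like the following. The service name web, the image and the port numbers are example values, not the ones used in the original notes.

```shell
# Create a one-replica service and publish container port 80
# on port 8080 of every swarm node.
create_web_service() {
  docker service create --name web --replicas 1 \
    --publish published=8080,target=80 nginx:alpine
}

# Show which node each task of the service runs on.
show_tasks() {
  docker service ps web
}

# Request the service through any node's IP; the routing mesh
# forwards the request to a node that runs a task.
check_node() {
  curl -fsS "http://$1:8080"
}

# Usage (node addresses are example values):
#   create_web_service
#   show_tasks
#   check_node 192.168.99.101
```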

Scaling

To watch the number of replicas, which is 1/1 in our example, use docker service ls.

To scale the service from 1 to 2 replicas, use docker service scale.

To list the containers and get a container ID, run docker ps on the node where the task runs.

To kill the container, use docker kill with that container ID.

Once the container has been killed, the swarm scheduler creates a new one, and the service's current state returns to running.
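
A sketch of the scaling and self-healing steps, assuming a service whose name is passed as an argument. The function names are hypothetical.

```shell
# Scale a service to the requested number of replicas.
# $1 = service name, $2 = replica count.
scale_service() {
  docker service scale "$1=$2"
}

# Kill the first local container whose name contains the service name.
# Swarm names task containers <service>.<slot>.<task-id>, so the name
# filter matches them. Run this on a node that hosts a task.
kill_first_container() {
  id=$(docker ps -q --filter "name=$1" | head -n 1)
  docker kill "$id"
}

# Usage (service name is an example value):
#   scale_service web 2
#   kill_first_container web
#   docker service ps web   # a replacement task should appear
```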

Node promote/demote

To watch and list the nodes, use docker node ls.

You can change the role of a node from manager to worker or vice versa with docker node demote and docker node promote.
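
A minimal sketch of changing a node's role. Both commands must be run on a manager node; the function names are hypothetical.

```shell
# Promote a worker node to manager.
promote_node() {
  docker node promote "$1"
}

# Demote a manager node back to worker.
demote_node() {
  docker node demote "$1"
}

# Usage (node name from this example):
#   promote_node vbox-02
#   demote_node vbox-02
```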

A healthy manager node shows one of two Manager Status values, Leader or Reachable; a manager that cannot communicate with the others shows Unavailable. You can add more than one manager node, but only one is the Leader, and the other healthy managers are Reachable.

The decision about how many manager nodes to implement is a trade-off between performance and fault-tolerance. Adding manager nodes to a swarm makes the swarm more fault-tolerant. However, additional manager nodes reduce write performance because more nodes must acknowledge proposals to update the swarm state. This means more network round-trip traffic.

Swarm manager nodes use the Raft Consensus Algorithm to manage the swarm state. Raft requires a majority of managers, also called the quorum, to agree on proposed updates to the swarm, such as node additions or removals.

While it is possible to scale a swarm down to a single manager node, it is impossible to demote the last manager node. Scaling down to a single manager is an unsafe operation and is not recommended. An odd number of managers is recommended, because the next even number does not make the quorum easier to keep.
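
The quorum arithmetic behind the odd-number recommendation can be worked through with a short script. A majority of N managers is floor(N/2) + 1, so the swarm tolerates the loss of floor((N-1)/2) managers. The function names are chosen here for illustration.

```shell
# Majority (quorum) needed for N managers.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of managers that can fail while a quorum remains.
fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5 6 7; do
  echo "managers=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Going from 3 to 4 managers raises the quorum from 2 to 3 but still tolerates only one failure, which is why the even number brings no benefit.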

Apply rolling updates and rollback to a service

Deploy an older Redis version:

Inspect the redis service:

Update the container image for redis. The swarm manager applies the update to nodes according to the UpdateConfig policy:

Run docker service ps <SERVICE-ID> to watch the rolling update:

To roll back to the previous specification:
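
The steps above can be sketched as follows. The image tags, replica count and update delay are assumptions borrowed from the official Docker rolling update tutorial, not values preserved in these notes; the function names are hypothetical.

```shell
# Deploy an older Redis with 3 replicas and a 10 s delay between task updates.
deploy_redis() {
  docker service create --replicas 3 --name redis --update-delay 10s redis:3.0.6
}

# Show the service definition, including its UpdateConfig.
inspect_redis() {
  docker service inspect --pretty redis
}

# Roll the service to a newer image, one task at a time.
update_redis() {
  docker service update --image redis:3.0.7 redis
}

# List the tasks to watch old ones shut down and new ones start.
watch_update() {
  docker service ps redis
}

# Revert the service to its previous specification.
rollback_redis() {
  docker service rollback redis
}
```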

Deploy a stack to a swarm using docker-stack / docker-compose

When running Docker Engine in swarm mode, you can use docker stack deploy to deploy a complete application stack to the swarm. The deploy command accepts a stack description in the form of a Compose file.

The docker stack deploy command supports any Compose file of version “3.0” or above.

- Create the stack with docker stack deploy.

The last argument is a name for the stack. Each network, volume and service name is prefixed with the stack name.

- Check that it’s running with docker stack services stackdemo.

Once it’s running, you should see 1/1 under REPLICAS for both services. This might take some time if you have a multi-node swarm, as images need to be pulled.

- Bring the stack down with docker stack rm.

- Bring the registry down with docker service rm.

- If you’re just testing things out on a local machine and want to bring your Docker Engine out of swarm mode, use docker swarm leave.
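
The stack lifecycle above can be sketched as a set of helpers. The stack name stackdemo and the registry service come from the text; the Compose file name docker-compose.yml and the function names are assumptions.

```shell
# Deploy the stack described by the Compose file; the last argument is the stack name.
stack_up() {
  docker stack deploy --compose-file docker-compose.yml stackdemo
}

# List the stack's services and their replica counts.
stack_status() {
  docker stack services stackdemo
}

# Remove the stack's services and networks.
stack_down() {
  docker stack rm stackdemo
}

# Remove the registry service.
registry_down() {
  docker service rm registry
}

# Take this engine out of swarm mode (--force is required on a manager).
leave_swarm() {
  docker swarm leave --force
}
```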